Why 2025 feels different

If you’re a video creator in 2025, you’ve probably experienced the same little shock: tasks that once chewed up hours (finding the best clip in a 90-minute livestream, cropping an interview into vertical format with readable captions, or patching two mismatched takes) can now be done in minutes with AI. This year feels different because AI in video editing has moved from “assistive” features toward something closer to a creative collaborator: generating new pixels and audio, reliably extracting platform-ready highlights, and even translating and dubbing at scale. These capabilities aren’t perfect, but they’re production-ready in many real-world workflows, and they’re changing how teams plan, shoot, and iterate.

In this post we’ll cover the headline trends that defined 2025, how generative and text-to-video tools work and where they shine, the AI-assisted workflow upgrades you can adopt today, real-world use cases, and the ethical and practical guardrails you should build into your pipeline.

What changed in 2025

1. Generative clip extension and inpainting became production-capable

Generative models can now extend short clips by synthesizing new frames, and can even generate matching ambient audio so an extended shot reads naturally. Adobe’s “Generative Extend” (powered by Firefly) and similar offerings let editors add roughly a couple of seconds of seamless footage, and a longer stretch of background sound, in 4K in a single pass. The capability was experimental a year ago and is now shipping in mainstream tools.

2. Text-to-video and image-to-video moved from experiments to practical short-form output

Models like Runway’s Gen series and Pika’s generators produce short, coherent clips from prompts or reference images. These tools are most useful for concept prototypes, motion tests, and stylized short content where perfect photorealism isn’t required but speed and iteration matter. Runway’s Gen-3 (and Gen-2 before it) demonstrated significant gains in fidelity and motion coherence, pushing text-to-video into actual pre-production and social-clip use.

3. AI became the default “editor-in-chief” for social repurposing

Automatic highlight detection, auto-captions, scene detection, and smart aspect-ratio conversions let creators turn long-form content into dozens of platform-ready clips in minutes, not days. Tools such as OpusClip, CapCut, and VEED have matured feature sets that automate formatting, captions, and B-roll insertion for TikTok, Reels, and Shorts.
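The arithmetic behind a "smart" aspect-ratio conversion is simple to sketch. The example below is a minimal illustration, not how any of the tools named above actually work: it computes a full-height 9:16 crop window inside a landscape frame and shifts it toward a subject position. The function name `vertical_crop` and the `focus_x` parameter are assumptions made for this sketch; in a real tool the subject position would come from some face or saliency detector.

```python
def vertical_crop(src_w: int, src_h: int, ratio_w: int = 9, ratio_h: int = 16,
                  focus_x: float = 0.5) -> tuple[int, int, int, int]:
    """Return (x, y, width, height) of a full-height crop with the target ratio.

    focus_x is the horizontal position of the subject as a fraction of the
    frame width (0.0 = left edge, 1.0 = right edge, 0.5 = centered).
    """
    if src_w * ratio_h < src_h * ratio_w:
        raise ValueError("source is already narrower than the target ratio")
    crop_h = src_h
    # Width that gives ratio_w:ratio_h at full height, rounded down to an even
    # number because many video encoders expect even dimensions.
    crop_w = (src_h * ratio_w // ratio_h) // 2 * 2
    # Center the window on the subject, then clamp it inside the frame.
    x = round(focus_x * src_w - crop_w / 2)
    x = max(0, min(x, src_w - crop_w))
    return x, 0, crop_w, crop_h
```

For a 1920x1080 source with a centered subject, `vertical_crop(1920, 1080)` returns `(657, 0, 606, 1080)`. A subject near the right edge (`focus_x=0.9`) clamps the window to `x = 1314` so the crop never leaves the frame.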

4. Multimodal media intelligence and search improved footage retrieval

“Find me the clip where the guest says ‘we launched’ and points at the screen” is now a practical query in some professional editors’ toolboxes. Adobe’s Media Intelligence and other search features index audio, faces, and descriptive labels so teams find usable footage dramatically faster.

5. Democratization: pro-grade features, gated by credits and subscriptions

Many generative and advanced features are available to creators, but commercial use often requires paid credits or enterprise plans (e.g., Firefly credits for video generation). That makes capabilities widely reachable while introducing cost-management tradeoffs for high-volume teams.

Deep dive — Generative & text-to-video technologies

What “generative” means in modern video editing

Generative video here refers to models that synthesize new pixel and/or audio content based on context: extending a clip with new frames (temporal extension), filling in or expanding regions of a frame (inpainting and outpainting), creating intermediate frames (generative interpolation), or generating entirely new short clips from text or image prompts. This goes beyond upscaling or conventional frame blending because the model “imagines” what should appear in new frames, guided by scene content, lighting, and motion cues.

Leading capabilities and practical examples

Generative Extend (frame + ambient audio extension)

Now shipping in Premiere Pro, Generative Extend uses Firefly video models to add frames at the head or tail of a clip, lengthening it while maintaining lighting and motion continuity. Editors use it to avoid awkward jump cuts, lengthen B-roll to match a VO, or fix timing without reshoots. This is powerful for last-minute fixes in ads and marketing videos.

Text-to-video & image-to-video

Runway’s Gen family (Gen-2, Gen-3 Alpha) and Pika’s tools allow creators to generate short sequences from descriptive prompts or a still image. These are great for quick previsualization, stylized social posts, or concept animation where you want to explore camera moves or moods before committing budget to VFX. Keep expectations realistic: outputs are best used for short clips (a few seconds up to ~10 seconds at higher fidelity), storyboarding, or assets to be composited later.

Video-to-video and style transfer

Applying motion characteristics, color grading, or filmic stylings from one clip to another is now much faster. Runway and other services let you transfer a look or motion pattern to source footage to get a consistent visual language across shots without manual grade work.

How to prompt and test effectively

- Start small: generate 1–3 second tests to iterate on prompts.

- Be explicit: mention camera motion, angle, time of day, mood, and format (e.g., “vertical, 9:16, 5s, handheld slight pan, golden-hour lighting”).

- Use reference frames: when possible, feed a still or short clip to guide color, framing, or style.

- Mask and composite: generative outputs are often strongest when used as elements — background fills, extended B-roll, or stylized overlays — rather than full-scene replacements.
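One way to keep prompts explicit and repeatable is to build them from named fields instead of free text. The sketch below is only an illustration of the tips above: the `ShotPrompt` class, its field names, and the `camera_variants` helper are hypothetical and do not correspond to any vendor's API. It produces a plain prompt string you would paste into whichever generator you use.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ShotPrompt:
    """Hypothetical container for the details a video prompt should spell out."""
    subject: str
    camera: str
    lighting: str
    mood: str
    frame: str = "vertical, 9:16"
    seconds: int = 3  # start small: short tests are cheaper to iterate on

    def to_text(self) -> str:
        parts = [self.subject, self.frame, f"{self.seconds}s",
                 self.camera, self.lighting, self.mood]
        # Skip empty fields so the prompt has no dangling commas.
        return ", ".join(p for p in parts if p)


def camera_variants(base: ShotPrompt, cameras: list[str]) -> list[str]:
    """Return one prompt per camera move, changing nothing else."""
    return [replace(base, camera=c).to_text() for c in cameras]


base = ShotPrompt(
    subject="barista pouring latte art",
    camera="handheld slight pan",
    lighting="golden-hour lighting",
    mood="warm and calm",
)
tests = camera_variants(base, ["handheld slight pan", "slow push-in"])
```

Changing one field at a time makes it easier to tell which part of the prompt caused a difference between two test renders.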

Limitations to watch for

Generative systems can drift on long sequences, produce texture or motion artifacts on complex interactions (fast camera moves, dense crowds), and sometimes hallucinate small but obvious errors (mismatched eyelines, impossible reflections). Cost and credit usage for long or high-resolution outputs remains a production consideration.

Deep dive — AI-assisted editing workflows & production speedups

AI tools in 2025 are not just “neat features” — they change where humans spend time in the edit.

Time-savers that reshaped workflows

Auto-cut and highlight reels

Long-form content — podcasts, webinars, game streams — can be transformed into dozens of short clips automatically. OpusClip’s Automatic Clip Maker analyzes engagement signals and extracts viral-ready moments, optionally adding captions and AI-generated B-roll. For creators who repurpose podcasts into social clips, that’s a one-click multiplier.

Automated captions, translation, and dubbing

Auto-captioning is standard, but AI dubbing and translation (with lip-syncing assistance) have matured. Adobe Firefly’s dubbing features can translate captions into over two dozen languages and generate dubbed audio that lines up reasonably well with lip movement — a huge time saver for localization teams.

Background removal & real-time replacement

No-green-screen background removal is now common in post and sometimes live tools. CapCut, Runway, and other SaaS provide fast background replacement for talking-head content, product shots, and quick promos, removing a persistent production bottleneck.

Collaboration and search improvements

Cloud-based media intelligence indexes footage with descriptive metadata. Instead of manually scrubbing hours of drives, teams can search for spoken phrases, specific objects, faces, or camera actions. That transforms discovery from manual to semantic search, cutting pre-edit prep time dramatically. Adobe’s Media Intelligence is an example of this trend landing in professional NLEs.

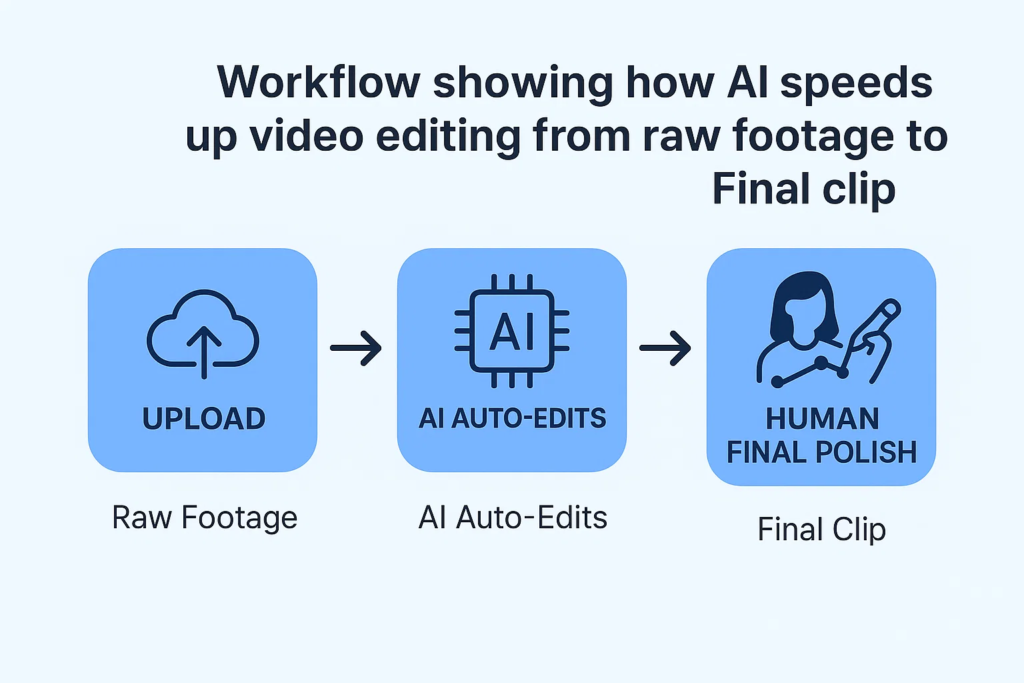

Production pipelines: AI + human balance

- Let AI do the heavy lifting: silence removal, rough highlights, captioning, color matching, and proxy generation.

- Humans own story and tone: pacing, transitions, key performance edits, and final grade should be human-validated.

- QA pass is essential: always check AI-generated frames for artifacts, translated copy for nuance, and dubbing for acceptable intonation.
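To make the "heavy lifting" concrete, here is a minimal sketch of the idea behind cutting silences from talking-head footage. It is a plain threshold on loudness, not the method any specific product uses, and all names and default values (`silent_spans`, `frame_ms`, `threshold`, `min_silence_ms`) are assumptions chosen for illustration. The input is a list of loudness values, one per fixed-length audio frame, which you would compute elsewhere.

```python
def silent_spans(levels, frame_ms=100, threshold=0.02, min_silence_ms=500):
    """Return (start_ms, end_ms) pairs for runs of frames quieter than threshold.

    levels: one loudness value per audio frame (for example RMS in 0.0 to 1.0).
    Runs shorter than min_silence_ms are ignored so natural pauses survive.
    """
    spans = []
    run_start = None
    # A loud sentinel frame at the end closes a run that reaches the last frame.
    for i, level in enumerate(list(levels) + [float("inf")]):
        if level < threshold:
            if run_start is None:
                run_start = i
        elif run_start is not None:
            if (i - run_start) * frame_ms >= min_silence_ms:
                spans.append((run_start * frame_ms, i * frame_ms))
            run_start = None
    return spans


levels = [0.3, 0.2] + [0.0] * 6 + [0.4] + [0.01] * 2 + [0.5] + [0.0] * 5
cuts = silent_spans(levels)
```

Here `cuts` is `[(200, 800), (1200, 1700)]`: the 200 ms dip in the middle is kept because it is shorter than the minimum. A human still decides whether a pause is dead air or deliberate pacing, which is why these spans are best treated as suggestions.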

Real-world use cases & examples

1. Social creator repurposing a livestream into vertical clips

A creator uploads a 90-minute livestream to OpusClip. The tool extracts 12 highlight clips, adds captions, and reformats them for TikTok and YouTube Shorts. The creator spends 15–30 minutes batch-editing the generated clips (tweaking captions, swapping brand assets) and publishes three posts the same day, work that used to take a full day of manual editing.

2. Indie filmmaker prototyping a VFX-heavy scene

Before greenlighting expensive VFX work, a director asks for mood and motion tests. Runway generates quick 8–10 second sequences from prompt and reference images that demonstrate camera movement and color grading. The director iterates on pacing and framing without tying up the VFX team — a cheaper, faster previsualization loop.

3. Marketing team localizing a campaign

A marketing team uses Adobe’s AI dubbing and caption translation to produce localized versions of a product announcement in 12 languages. Generative Extend fixes a couple of short timing mismatches without reshoots, and the team launches localized creatives across regions faster and cheaper than an all-human workflow. The result: significantly reduced time-to-market for region-specific campaigns.

Tool quick-facts (one-line)

- Runway (Gen-3): strong for short text-to-video prototypes and style transfer.

- Adobe Premiere Pro (Generative Extend/Firefly): frame extension, media intelligence, and translation in a professional NLE.

- OpusClip: automated highlight detection and platform-ready clip generation.

- CapCut: accessible auto-editing features and AutoCut for creators focused on rapid social output.

- Wisecut: AI-driven silence removal and storyboard-based editing for talking-head content.

Challenges, ethics & best practices

As useful as these tools are, they introduce new responsibilities.

Deepfakes & authenticity risk

Generative tools can create highly convincing content. That’s a boon for creativity and a risk for misinformation. Label AI-generated material where relevant and consider watermarking or provenance metadata to maintain trust with audiences. Platforms and publishers are starting to expect disclosure for synthetic content.

Copyright and model training concerns

Many generative models are trained on large web-scale datasets. Questions about copyrighted source material and ownership of outputs are active legal debates; teams should consult legal counsel for commercial use that may border on derivative content. Vendors like Adobe emphasize licensed or public-domain training sources for their commercial models, but due diligence is advised.

Hallucinations and quality control

Generative outputs sometimes invent details (wrong props, inconsistent reflections, lip-sync drift). Always run a human QC pass, especially for client work and deliverables that represent a brand.

Privacy and consent

Generating or dubbing a real person’s likeness or voice requires consent. Even when AI makes modest edits, think through permissions and disclose when AI has materially changed a voice or appearance.

Practical best-practice checklist

- Preserve originals and version every AI pass.

- Keep a short “AI log” inside project files: tool name, model version, prompts, credits used.

- Use AI for repeatable tasks, not final creative judgment.

- Disclose synthetic content when it affects representation or trust.

- Budget for credits and test at production scale before committing to large volumes.
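The “AI log” and credit budgeting items in the checklist can live in one small file per project. The sketch below shows one possible shape, assuming a JSON Lines file with one record per AI pass. The `AIPass` fields, the file layout, and every value in the example (tool names, versions, credit counts) are placeholders invented for illustration, not real pricing or product data.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class AIPass:
    """One AI operation applied to project media (hypothetical schema)."""
    tool: str
    model_version: str
    prompt: str
    credits_used: int
    source_file: str
    output_file: str


def to_jsonl(entries: list[AIPass]) -> str:
    """Serialize entries as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(asdict(e), sort_keys=True) for e in entries)


def credits_total(entries: list[AIPass]) -> int:
    return sum(e.credits_used for e in entries)


def over_budget(entries: list[AIPass], budget: int) -> bool:
    return credits_total(entries) > budget


# Placeholder entries; none of these values describe a real tool or price.
log = [
    AIPass("ExampleExtendTool", "example-v1", "", 10,
           "broll_03.mov", "broll_03_ext.mov"),
    AIPass("ExampleTextToVideo", "example-v2",
           "city street, vertical, 9:16, 3s", 25,
           "", "previz_01.mp4"),
]
print(to_jsonl(log))
print("credits used:", credits_total(log))
```

Writing the log as plain text keeps it readable in any editor and easy to diff when a pass is re-run with a different prompt or model version.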

Practical how-to: a 5-minute Generative Extend test (mini tutorial)

- Pick a short B-roll clip (3–6s) in Premiere Pro.

- Select the Generative Extend tool and drag the clip’s edge to the target length; extend the ambient audio the same way, and review any quality settings your version exposes.

- Run a one-second extension test first to verify lighting/motion match.

- If output looks good, extend to required length and use masks to confine edits to background or peripheral elements.

- Always scrub frame-by-frame and render a short MP4 for playback on target device.

(If you use Firefly credits for longer extensions, note the credit cost before running long renders.)

Conclusion — What creators and teams should do next

AI in video editing in 2025 is no longer a toy: it’s a practical lever that speeds iteration, lowers cost for many tasks, and enables rapid prototyping. That said, creative judgment, narrative sense, and ethical oversight remain distinctly human strengths. If you’re a creator or production lead, start by integrating one or two AI passes into your pipeline (auto-caption + highlight extraction is a low-friction start), add a mandatory human QA stage, and experiment with generative elements for B-roll and previsualization before relying on them for final deliverables.